Principal Component Analysis

A very popular method in shape modelling is Principal Component Analysis (PCA). PCA is closely related to the Karhunen-Loève (KL) expansion. It can be seen as the special case where the Gaussian Process is only defined on a discrete domain (i.e. it models discrete functions) and the covariance function is the sample covariance estimated from a set of example data. In this article, we will explain the connection between the PCA and the KL expansion in more detail, and discuss how PCA can be used to visualise and explore the shape variations in a systematic way. This can give us interesting insights about a shape family.

The Karhunen-Loève expansion in the discrete case

We have seen that the KL expansion allows us to write a Gaussian Process model as

Here, are the eigenfunctions/eigenvalue pairs of an operator that is associated with the covariance function . To fully understand this, advanced concepts from functional analysis are needed. However, if we restrict our setting to discrete deformation fields, we can understand this expansion using basic linear algebra only. Recall from Step 2.2 that if we consider deformation fields that are defined on a discrete, finite domain, we can represent each discretised function as a vector and define a distribution over as

is a symmetric, positive semi-definite matrix and hence admits an eigendecomposition:

Here, refers to the -th column of and represents the -th eigenvector of . The value is the corresponding eigenvalue. This decomposition can, for example, be computed using a Singular Value Decomposition (SVD).

We can write the expansion in terms of the eigenpairs :

It is easy to check that the expected value of is and its covariance matrix . Hence, we have that as required. Interpreting the eigenvectors again as a discrete deformation field, we see that this corresponds exactly to a KL expansion.

The Principal Component Analysis

PCA is a fundamental tool in shape modelling. It is essentially the KL expansion for a discrete representation of the data, with the additional assumption that the covariance matrix is estimated from example datasets. More precisely, assume that we are given a set of discrete deformation fields , which we also represent as vectors , . Note that, as the vector represents a full deformation field, is usually quite large. As mentioned before, PCA assumes that the covariance function is estimated from these examples:

where we defined the data matrix X as , and is the sample mean .

We note that in this case, the rank of is at most , which is the number of examples. This has two consequences: first, it allows us to compute the decomposition efficiently, by performing an SVD of the much smaller data matrix . Second, has in this case only non-zero eigenvalues. The expansion reduces to , which implies that any deformation can by specified completely by a coefficient vector .

Using PCA to visualise shape variation

In PCA, the eigenvectors of the covariance matrix are usually referred to as principal components or eigenmodes. The first principal component is often called the main mode of variation, because it represents the direction of highest variance in the data. Accordingly, the second principal component represents the direction that maximizes the variance in the data under the constraint that it is orthogonal to the first principal component, and so on. This property allows us to systematically explore the shape variations of a model.

We can visualise the variation represented by the -th principal component by setting the coefficient and and by drawing the corresponding sample defined by

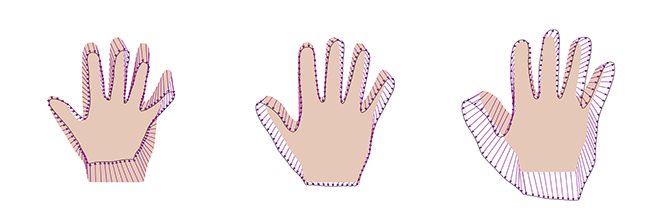

Typically, is chosen such that , which corresponds to a deformation that is 3 standard deviations away from the mean. Figure 1 shows the shape variation associated to the first principal component for our hand example.

Figure 1: the shape variation represented by the first principal component of the hand model, where the hand on the left shows a deformation with , the middle hand shows the mean deformation () and the hand on the right the deformation with .

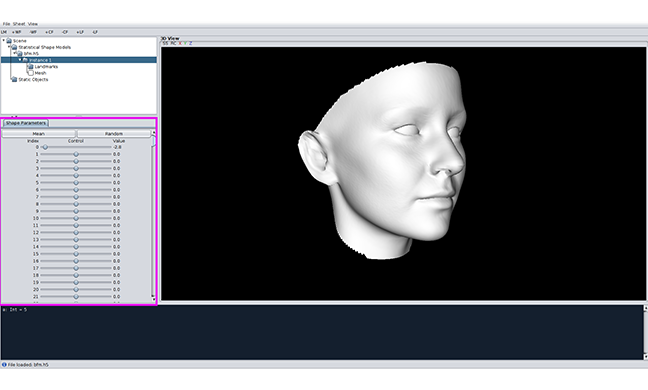

In Scalismo, visualising these variations is even simpler. The sliders that you can see in Figure 2 correspond to the coefficients in the above expansion and can be used to interactively explore the principal shape variations.